Welcome to Furiosa Docs

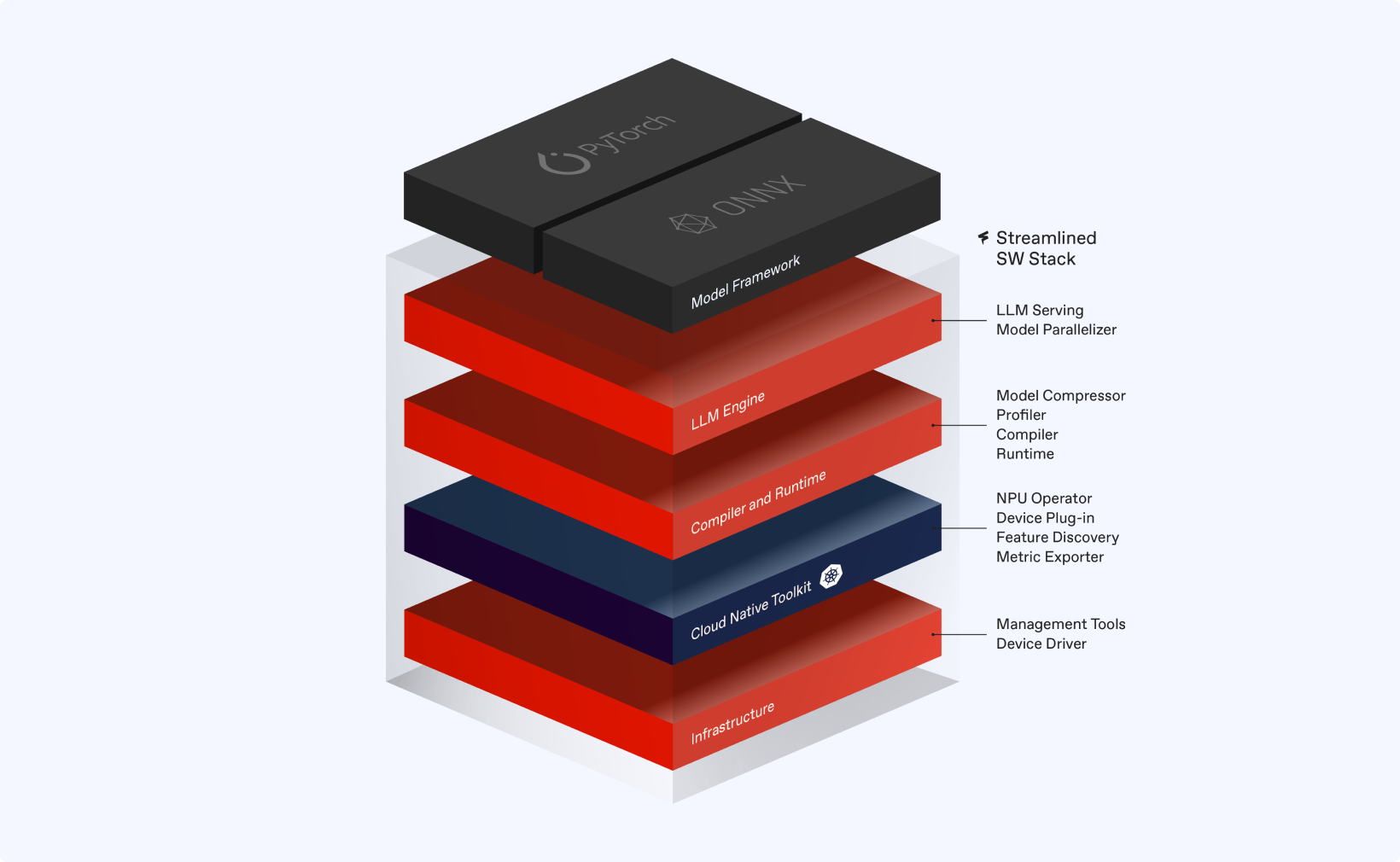

Welcome! FuriosaAI offers a streamlined software stack for deep learning model inference on FuriosaAI NPUs. This guide covers the entire workflow for building inference applications, from a PyTorch model, through model quantization, to model serving and deployment.

Warning

This document is based on the Furiosa SDK 2025.2.0 (beta 1) version. The features and APIs described herein are subject to change in the future.

Stay up to date with the newest features, improvements, and fixes in the latest release.

Furiosa-LLM is a high-performance inference engine for large language models (LLMs). This document explains how to install and use Furiosa-LLM.

See what’s ahead for FuriosaAI with our planned releases and upcoming features. Stay informed on development progress and key milestones.

Pre-optimized and pre-compiled models for FuriosaAI NPUs are available on the Hugging Face Hub. Check out the latest models and their capabilities.

Overview

FuriosaAI RNGD: RNGD hardware specification and features

FuriosaAI’s Software Stack: An overview of the FuriosaAI software stack

Supported Models: A list of supported models

What’s New: New features and changes in the latest release

Roadmap: The future roadmap of the FuriosaAI software stack

Getting Started

Installing Prerequisites: How to install the prerequisites for the FuriosaAI software stack

Upgrading FuriosaAI’s Software: How to upgrade the FuriosaAI software stack

Furiosa-LLM

Furiosa-LLM: An introduction to Furiosa-LLM

OpenAI-Compatible Server: More details about the OpenAI-compatible server and its features

Model Preparation: How to prepare LLM models to be served by Furiosa-LLM

Model Parallelism: Tensor, pipeline, and data parallelism in Furiosa-LLM

Building Model Artifacts By Examples: A guide to building model artifacts through examples

API Reference: The Python API reference for Furiosa-LLM

Examples: Examples of using Furiosa-LLM

Deploying Furiosa-LLM on Kubernetes: A guide to deploying Furiosa-LLM on Kubernetes

Cloud Native Toolkit

Cloud Native Toolkit: An overview of the Cloud Native Toolkit

Container Support: An overview of Container Support

Kubernetes Plugins: An overview of Kubernetes support

Device Management

Furiosa SMI CLI: A command line utility for managing FuriosaAI NPUs

Furiosa SMI Library: A library for managing FuriosaAI NPUs

Tutorials and Examples

FuriosaAI SDK CookBook: A collection of OSS projects for AI-driven solutions using FuriosaAI NPUs.